
Legislators around the world are introducing new laws that require businesses to perform stricter online identity and age verification to protect minors in an increasingly digital world. Millennials, Generation Z, and teenagers love surfing the internet: they engage and shop on social media, visit digital marketplaces, and buy goods and services on their phones.

Age-restricted industries such as online gaming, gambling, dating, alcohol & tobacco, adult entertainment, nutraceuticals, and pharmaceuticals already put barriers in place to make sure their visitors are 18+, but traditional methods of age and ID verification often fail to stop today’s tech-savvy youth from gaining access. This is why legislators are stepping up their game.

Legal pressure is mounting

United States of America (USA)

In the USA, the Children’s Online Privacy Protection Act (COPPA) is a federal law that affects operators and online merchants that offer services to children under 13, and companies that collect personal information from them. These operators must obtain verifiable parental consent before collecting a child’s personal information. There is an ongoing debate between those who want to tighten restrictions, those who want to raise the age threshold from 13 to 16, and those who claim that age restrictions are ineffective in today’s digital world.

A growing number of states are implementing new legislation to regulate age-restricted businesses. Last December, a child protection bill based on Louisiana’s stricter regulations was introduced in the US Senate. In Arkansas, businesses that do not implement strict age-verification policies could be held liable for any damage children suffer after being exposed to adult content. At the federal level, Utah Rep. Chris Stewart (R-UT) introduced the Social Media Child Protection Act, which would make it illegal for social media platforms to provide access to children under the age of 16, citing concerns that these platforms contribute to depression, anxiety, and suicide among young people.

Examples of Age-Restricted Businesses:

- Alcohol, Tobacco, e-Cigarettes, and Vaping

- Online Gaming

- Online Betting and Gambling

- Pharmaceuticals

- Nutraceuticals

- Dating Services

- Adult Entertainment

- Social Media Platforms

The European Union (EU)

The European Union protects children online through its own strategy, Better Internet for Kids (BIK+), which it adopted in May last year. BIK+ is the digital arm of the EU Strategy on the Rights of the Child (RoC):

“Europe’s Digital Decade offers great opportunities to children, but technology can also pose risks. With the new strategy for a Better Internet for Kids, we are providing kids with the competencies and tools to navigate the digital world safely and confidently. We call upon industry to play its part in creating a safe, age-appropriate digital environment for children in respect of EU rules.”

Over half of the EU’s children spend many hours a week on social media platforms, where they are exposed to cyberbullying, harmful content, and advertisements for products and services that could affect their well-being.

As part of the EU’s Digital Services Act (DSA), the Commission is planning to facilitate an EU code for age-appropriate design in accordance with the Audiovisual Media Services Directive and GDPR. The Commission is also looking into ways of integrating its age verification program into the controversial European Digital Identity wallet. By 2024, every EU member state must make a Digital Identity Wallet available to every citizen who wants one.

Then there is eIDAS (electronic Identification, Authentication and trust Services), the framework through which the European Commission intends to make cross-border e-ID a reality. For those who object to a government-run identity and signature system, euCONSENT offers alternative AI-based biometric age verification tools that do not require sharing sensitive personal data.

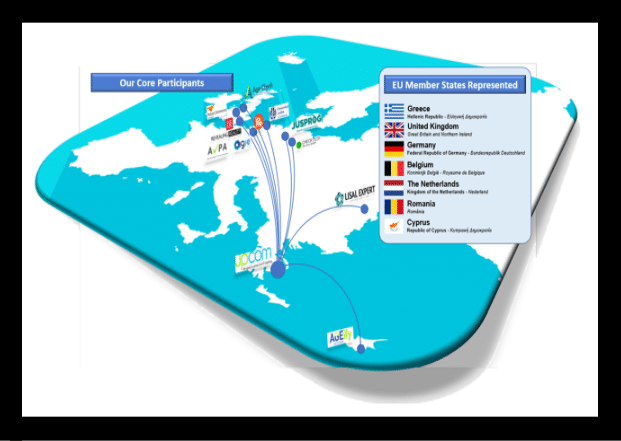

In 2022, the euCONSENT Project, an R&D initiative that united academic institutions, NGOs, and tech providers to design, deliver and pilot a new European system for digital age verification and parental consent, proved to be an overall success.

In December, the project issued a statement summarizing its achievements:

- The project brought together competing suppliers.

- It established an impressive advisory board of all the relevant stakeholders – global platforms such as Meta and Google, children’s charities, EU-wide trade associations, and regulatory bodies. We would like to put on record our thanks to everyone who joined this Board and gave such important advice to guide our work.

- It created new international standards and defined shared levels of assurance for age checks that allow services, regulators, and suppliers to specify the level of accuracy and certainty they require, and in turn, this facilitates interoperability.

United Kingdom (UK)

In the UK, amendments to the Online Safety Bill introduce criminal liability, exposing tech executives to legal risk if their platforms fail to protect children from harmful content. To avoid enforcement action by Ofcom, the UK communications regulator, tech firms must show how they enforce age restrictions and prove that they carry out risk assessments.

Culture Secretary Michelle Donelan told The Telegraph: “Some platforms claim that they do not allow children access but simultaneously have adverts targeting children. The legislation now compels companies to be much more explicit about how they enforce their own age limits.”

The revised Online Safety Bill will apply to “companies whose services host user-generated content such as images, videos, and comments, or which allow UK users to talk with other people online through messaging, comments and forums.” This includes:

- the biggest and most popular social media platforms.

- sites such as forums and messaging apps, some online games, cloud storage, and the most popular pornography sites.

- search engines, which play a significant role in enabling users to access harmful content.

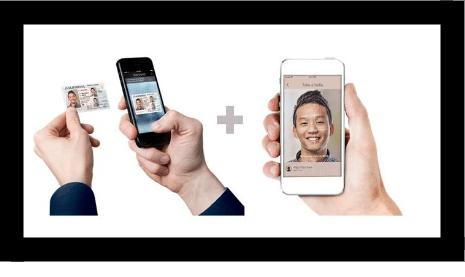

Social media platforms are feeling the heat. In the UK, Instagram is getting ahead of upcoming legal requirements: since November last year, anyone who tries to change their registered date of birth from under 18 to 18 or over must verify their age, either by uploading an ID or by recording a video selfie that is used to estimate their age.
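The date-of-birth rule described above comes down to a simple check: verification is triggered only when an edit moves an account from the minor bracket into the adult bracket. The Python sketch below illustrates that logic under stated assumptions; the function names and the `adult_age=18` default are hypothetical, and this is not Instagram’s actual implementation.

```python
from datetime import date


def age_on(dob: date, today: date) -> int:
    """Return full years of age on `today` for someone born on `dob`."""
    # Subtract one year if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


def dob_change_needs_verification(old_dob: date, new_dob: date,
                                  today: date, adult_age: int = 18) -> bool:
    """Hypothetical rule: flag only edits that turn a minor into an adult.

    Assumption: adult-to-adult corrections and changes that make the
    user younger do not trigger an age check.
    """
    was_minor = age_on(old_dob, today) < adult_age
    now_adult = age_on(new_dob, today) >= adult_age
    return was_minor and now_adult
```

For example, a user registered with a 2008 birth date who tries to switch to 2000 would be flagged, while an adult correcting one adult birth date to another would not.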

Growing concern about the effects of social media on children’s mental health comes at a time when Facebook and Instagram users are moving to TikTok, a Chinese platform that surpassed 1 billion active users last year. While TikTok has successfully conquered Western markets, its success has also drawn the attention of international regulators. In the UK, TikTok is facing a $29 million fine after the Information Commissioner’s Office (ICO) provisionally found that the company breached child data protection laws. According to the ICO, TikTok may have processed the data of children under 13 between May 2018 and July 2020 without parental consent.

Meanwhile, China has taken its own measures to protect its youth against what Chinese state media has called “spiritual opium.” In 2019, authorities limited minors to 90 minutes of gaming a day on weekdays and banned them from playing between 10 p.m. and 8 a.m. Since 2021, Chinese minors have only been allowed to play online games for one hour a day on Fridays, weekends, and public holidays. “Youth Mode” settings on popular apps include real-name registration, age verification tools, and facial recognition. In China’s surveillance state, gaming giant Tencent will use facial recognition technology as part of its new Midnight Patrol system, whose first phase will be rolled out on 60 popular mobile games.

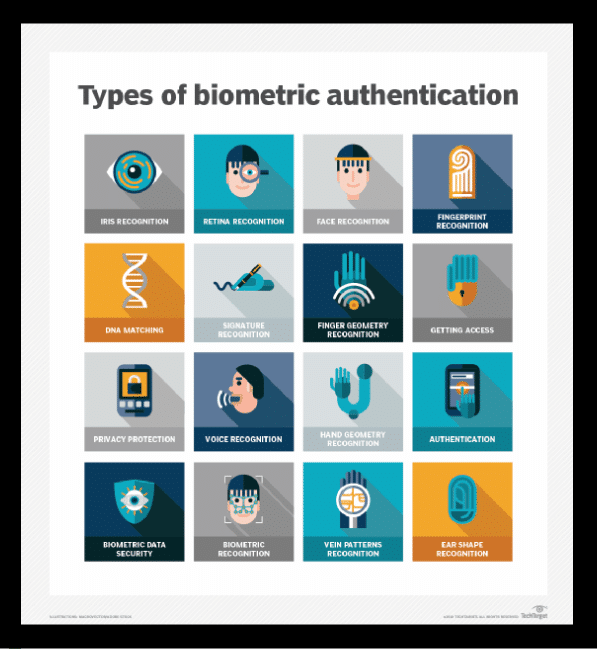

Biometrics Age Verification

As regtech and fintech have evolved, digital identity verification solutions have been developed to help age-restricted businesses comply with new legislation. Biometric technology offers businesses powerful tools to avoid legal issues, heavy fines, and the reputational risk of exposing minors to age-restricted goods and services.

Biometric solution providers claim that their tools are more than 95% accurate at identifying the person who wants to access age-restricted content or services. These identity and authentication solutions give companies practical means of ensuring children’s online safety. Typically, ID verification tools extract and analyze personal information, such as the date of birth, from a wide variety of government-issued photo IDs. Liveness detection is an anti-spoofing technique that checks whether a visitor is imitating or using another person’s face to trick facial biometric identification systems.
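To make the ID verification and liveness steps more concrete, here is a minimal Python sketch of how a merchant might combine a vendor’s results into a single access decision. It assumes a hypothetical `VerificationResult` record (date of birth read from the ID, document expiry, a liveness flag, and a selfie-to-ID face-match score) and an illustrative 0.9 match threshold; none of these names or values come from a specific vendor or from Segpay’s services.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class VerificationResult:
    """Hypothetical output of an ID verification + liveness vendor call."""
    date_of_birth: date      # extracted from the government-issued photo ID
    document_expiry: date    # expiry date printed on the ID
    liveness_passed: bool    # outcome of the liveness (anti-spoofing) check
    face_match_score: float  # selfie vs. ID photo similarity, 0.0 to 1.0


def age_on(dob: date, today: date) -> int:
    """Return full years of age on `today` for someone born on `dob`."""
    had_birthday = (today.month, today.day) >= (dob.month, dob.day)
    return today.year - dob.year - (0 if had_birthday else 1)


def grant_access(result: VerificationResult, today: date,
                 min_age: int = 18, min_face_match: float = 0.9) -> bool:
    """Allow access only if every check passes (thresholds are assumptions)."""
    if not result.liveness_passed:  # possible spoof: photo, mask, or replay
        return False
    if result.face_match_score < min_face_match:  # selfie is not the ID holder
        return False
    if result.document_expiry < today:  # expired document
        return False
    return age_on(result.date_of_birth, today) >= min_age
```

Checking liveness before the face match matters: a high match score means little if the “face” is a printed photo or a replayed video of the real ID holder.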

Types of Biometric Authentication

It is in the interest of age-restricted businesses to implement an age verification program to protect minors. You have a legal and moral obligation to verify the age of your clients while protecting your business and brand’s reputation.

In 2023, we will see regulators tighten their grip on all platforms that potentially expose children to harmful content, products, or services. Age-restricted businesses should prepare now and explore the many solutions available. Keeping up to date with the latest legal requirements from the FDA and other regulators, state laws, and merchant account policies is vital to your business. Merchants that sell age-sensitive products or services can either invest in expensive biometric solutions or partner with a payment service provider, such as Segpay, that includes age verification in its Value-Added Compliance Services (VAS).

Want to learn more about Biometrics Age Verification?

If this article has made you curious and you want some free advice on how to protect your business from non-compliance with new age verification laws, feel free to contact one of our experts.

******

This article has been written by @SandeCopywriter on behalf of Segpay Europe